Supercharging LLMs with Search

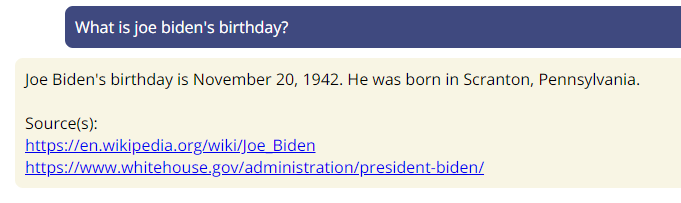

One of the problems inherent to most large language models is their lack of up-to-date information. Large language models are often trained on datasets that are months, or even years old.

In this tutorial, we show you how to use PhaseLLM to supercharge your LLM chatbot's answers by providing the chatbot with context from the web.

Check out the demo video and code walkthrough below, or skip them and read the written version here. The full demo application is open source and available here.

The Pieces

All you need are three classes from the PhaseLLM library, along with some code and prompts to glue them together.

Starting with the classes you need:

from phasellm.llms import ClaudeWrapper, ChatBot
from phasellm.agents import WebSearchAgent

We choose to use the ClaudeWrapper because Anthropic's Claude model has a large context length (100,000 tokens). This will improve our results because our web search agent will be adding a lot of tokens to the context.
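To get a feel for why context length matters here, the sketch below estimates how many tokens a scraped page might consume and trims the text to a budget. The 4-characters-per-token ratio is only a rough rule of thumb for English text, not Claude's actual tokenizer, and the function names and budget value are assumptions made for illustration; none of this is part of PhaseLLM.

```python
# Rough heuristic (an assumption): about 4 characters per token for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for `text` (not a real tokenizer)."""
    return (len(text) + CHARS_PER_TOKEN - 1) // CHARS_PER_TOKEN


def trim_to_budget(text: str, max_tokens: int) -> str:
    """Cut `text` so its estimated token count does not exceed `max_tokens`."""
    return text[: max_tokens * CHARS_PER_TOKEN]


page = "word " * 10_000  # stand-in for scraped page content (50,000 characters)
print(estimate_tokens(page))                         # 12500
print(estimate_tokens(trim_to_budget(page, 5_000)))  # 5000
```

Two pages of this size already add roughly 25,000 estimated tokens, which is why a model with a large context window is a comfortable choice for this kind of application.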

The WebSearchAgent uses the WebpageAgent under the hood. It is responsible for scraping web page content for each URL returned by your search engine of choice. As of the writing of this tutorial (phasellm version 0.0.15), the WebSearchAgent supports the Google and Brave search engines.

Be careful with the Brave search engine: its terms of service currently prohibit performing inference on its search results.
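To make the scraping step more concrete, here is a minimal sketch of turning a page's HTML into plain text using only Python's standard library. It is not how the WebpageAgent is implemented; the class and function names are hypothetical, and a real scraper also has to fetch the page and cope with errors, encodings, and dynamic content.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, ignoring the contents of <script> and <style>."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # greater than 0 while inside a skipped tag
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is outside skipped tags.
        if self._skip_depth == 0 and data.strip():
            self._chunks.append(data.strip())

    def text(self):
        return " ".join(self._chunks)


def html_to_text(html: str) -> str:
    """Return the visible text of an HTML document as a single string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


sample = "<html><head><style>p{}</style></head><body><h1>Title</h1><p>Hello <b>world</b></p></body></html>"
print(html_to_text(sample))  # Title Hello world
```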

Instantiate the classes you imported. You will need to provide an Anthropic API key for the ClaudeWrapper, and a Google or Brave API key for the WebSearchAgent. In this case, we will use the Google search engine, so we will provide a Google Search API key. You can get one here.
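If you would rather not paste keys into your source code, one option is to read them from environment variables. This is a small sketch rather than anything PhaseLLM requires; the helper name and the environment variable names are assumptions.

```python
import os


def require_env(name: str) -> str:
    """Return the value of environment variable `name`, or fail with a clear error."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Set the {name} environment variable before running.")
    return value


# Example usage (variable names are illustrative, not required by PhaseLLM):
# anthropic_api_key = require_env("ANTHROPIC_API_KEY")
# google_api_key = require_env("GOOGLE_SEARCH_API_KEY")
```

The returned strings can then be passed wherever the placeholder keys appear in the code that follows.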

# Instantiate the ClaudeWrapper
llm = ClaudeWrapper("YOUR_ANTHROPIC_API_KEY")

# Instantiate the WebSearchAgent
web_search_agent = WebSearchAgent(api_key="YOUR_GOOGLE_SEARCH_API_KEY")

# Instantiate the ChatBot with the LLM it should talk to
chatbot = ChatBot(llm)

Putting it Together

Here is the general flow of the code that glues the pieces together:

- Get a prompt from the user.

user_prompt = "What is the best machine learning framework in 2023?"

- Submit a chat message on behalf of the user that asks the LLM to come up with a Google search query to help answer the user's question. This is useful when the user's prompt would not make a good search query on its own.

query = chatbot.chat(
    'Come up with a google search query that will provide more information to help answer the question: '
    f'"{user_prompt}". Respond with only the query.'
)

- Search Google with the WebSearchAgent and get the top 2 results. Note that you need to set up a "Custom Search Engine" with Google and provide the ID of that search engine to the WebSearchAgent. You can find the documentation for that here.

# Note that num is a parameter specific to Google's API.
results = web_search_agent.search_google(
    query,
    custom_search_engine_id="YOUR_GOOGLE_SEARCH_ENGINE_ID",
    num=2
)

- Append the search result data to the ChatBot message stack. This gives the subsequent chats with the LLM the context of the search results. Note that if the WebpageAgent ran into an error when scraping the contents of the search results, the results list may be empty.

# Keep track of your sources, if you care.
sources = []
if len(results) >= 1:
    for result in results:
        chatbot._append_message(
            role='search result',
            message=result.content
        )
        sources.append(result.url)

- Submit the user's original prompt to the ChatBot to get the final answer.

response = chatbot.chat(user_prompt)
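The steps above can be wrapped into one function and tried offline. In the sketch below, FakeChatBot, FakeSearchAgent, FakeResult, and answer_with_search are all hypothetical names: the fakes only mirror the method names and result fields used in this tutorial (chat, _append_message, search_google, url, content) so the flow can run without API keys or network access. They are not PhaseLLM classes, and their replies are canned.

```python
from dataclasses import dataclass


@dataclass
class FakeResult:
    """Stand-in for a search result with the two fields the tutorial reads."""
    url: str
    content: str


class FakeSearchAgent:
    """Hypothetical offline replacement for WebSearchAgent."""

    def search_google(self, query, custom_search_engine_id, num=2):
        return [
            FakeResult(f"https://example.com/{i}", f"Page {i} about {query}")
            for i in range(num)
        ]


class FakeChatBot:
    """Hypothetical offline replacement for ChatBot that records its messages."""

    def __init__(self):
        self.messages = []

    def _append_message(self, role, message):
        self.messages.append({"role": role, "content": message})

    def chat(self, message):
        self._append_message("user", message)
        reply = f"(reply to message {len(self.messages)})"  # canned answer
        self._append_message("assistant", reply)
        return reply


def answer_with_search(chatbot, search_agent, user_prompt, engine_id, num_results=2):
    """Run the five steps above and return (answer, sources)."""
    # Step 2: ask the LLM for a search query.
    query = chatbot.chat(
        "Come up with a google search query that will provide more information "
        f'to help answer the question: "{user_prompt}". Respond with only the query.'
    )
    # Step 3: fetch the top results.
    results = search_agent.search_google(
        query, custom_search_engine_id=engine_id, num=num_results
    )
    # Step 4: add each result to the message stack; an empty list is simply skipped.
    sources = []
    for result in results:
        chatbot._append_message(role="search result", message=result.content)
        sources.append(result.url)
    # Step 5: ask the original question, now with search context.
    return chatbot.chat(user_prompt), sources


bot = FakeChatBot()
answer, sources = answer_with_search(
    bot,
    FakeSearchAgent(),
    "What is the best machine learning framework in 2023?",
    "FAKE_ENGINE_ID",
)
print(answer)
print(sources)
```

Swapping the fakes for the real chatbot and web_search_agent objects should leave the function body unchanged, assuming they expose the same methods shown in the steps above.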

Conclusion

That's it! You now have a chatbot that can search the web to help it answer questions.

See the full demo application here.

As always, reach out at w (at) phaseai (dot) com if you want to collaborate. You can also follow PhaseLLM on Twitter.