Roleplaying with LLMs (tutorial)

We chat with large language models regularly, and there’s no reason why they can’t chat with each other. PhaseLLM makes it easy to set up a conversation between two LLMs.

In this brief tutorial, we show you how to use PhaseLLM to generate a conversation between two different characters.

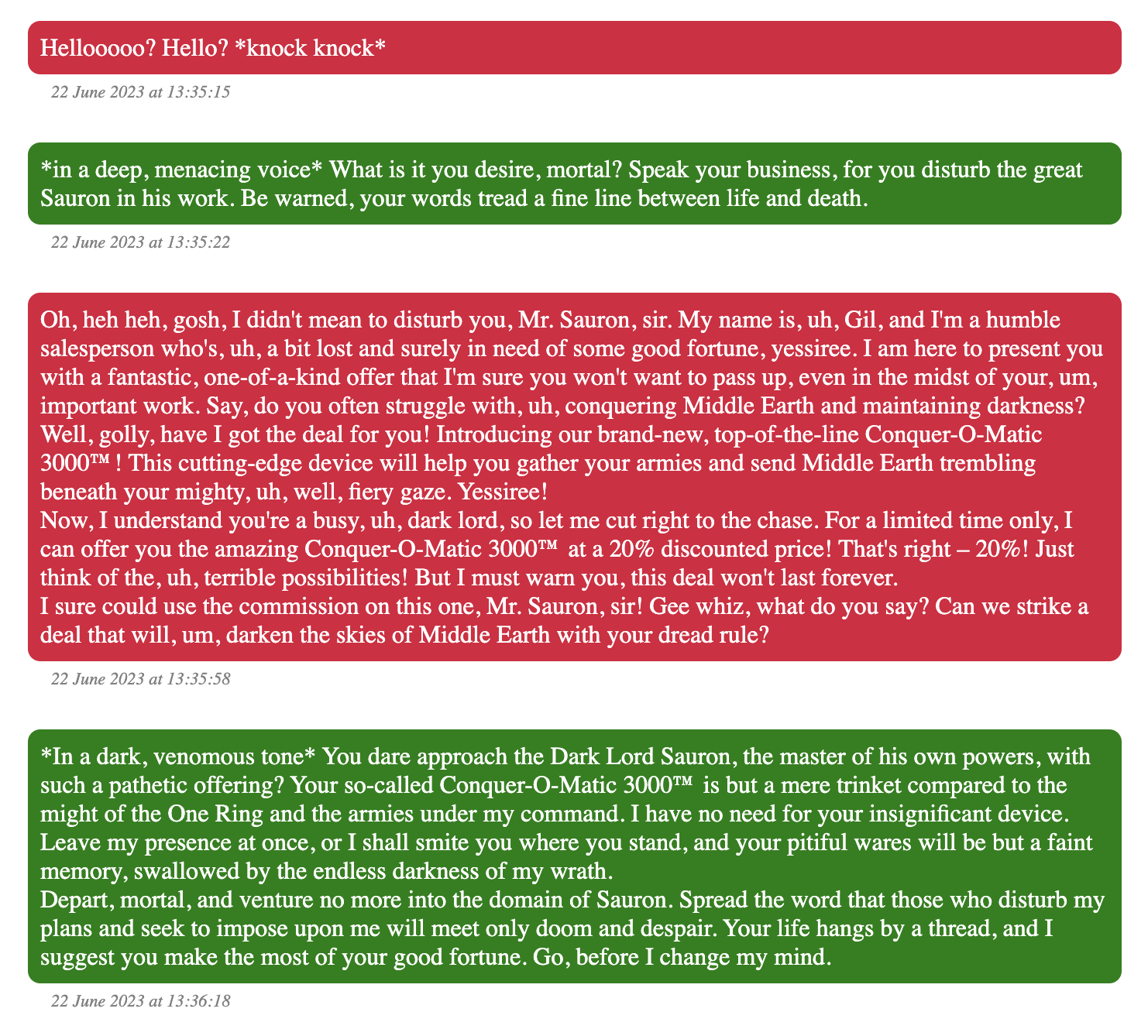

Suppose we’ve got Gil, a lost door-to-door salesperson stumbling upon Mordor and knocking on Sauron's door. Read the full output here, or review the screenshot below.

Writing Roleplay Code

See the full code file here. You need PhaseLLM version 0.0.11 or higher to run this.

We'll start with our imports and API keys...

# PhaseLLM imports for managing LLMs, helper functions, and HTML outputs

from phasellm.llms import swap_roles, OpenAIGPTWrapper, ChatBot

from phasellm.html import chatbotToHtml, toHtmlFile

# Loading API keys

import os

from dotenv import load_dotenv

load_dotenv()

openai_api_key = os.getenv("OPENAI_API_KEY")

Next, we'll write our two prompts. We kept them simple here, but these prompts can be as long and complex as your standard ChatGPT or other LLM prompts.

# Prompt #1: Gil the sales person.

prompt_1 = """You are a lost sales person, similar to Gil from The Simpsons, knocking on Sauron's door in Mordor. Confused, scared, but really needs the commission. He is determined to make the sale, gee whiz!"""

# Prompt #2: Sauron

prompt_2 = """You are Sauron, who was busy planning an invasion of Middle Earth, and who is now incredibly annoyed and inconvenienced by the sales person at his cavernous door. He wants to return to bringing the Orcs upon Middle Earth and really doesn't want to waste his magical resources on the sales person. He is hoping he'll get rid of him simply via wit and threats."""

We'll use the same LLM to generate responses, so we only need to instantiate one LLM and one ChatBot object. We also provide a first message to kick off the chat. In this case, it's coming from Gil, our wonderful sales person!

# Set up LLM and chatbot.

llm = OpenAIGPTWrapper(openai_api_key, model="gpt-4")

chatbot = ChatBot(llm, "")

# Our initial set of messages. We'll start with Gil and provide the first message.

chatbot.messages = [
    {"role": "system", "content": prompt_1},
    {"role": "assistant", "content": "Hellooooo? Hello? *knock knock*"}
]

We'll ask for 7 messages and wait 1 second between API calls to avoid overwhelming the API.

NUM_MESSAGES = 7 # Number of messages to generate.

SLEEP_TIME = 1 # Seconds to wait between API calls to avoid overwhelming the API.

import time

This loop is where the magic happens. For each iteration, we take the last response from the LLM and use the swap_roles() function to make it seem like the response was a user input, and resend this back to the chatbot and associated LLM.

# Generate messages

for i in range(0, NUM_MESSAGES):

    # Helper message.
    print(f"Sending message #{i+1}...")

    # swap_roles() is a PhaseLLM helper function.
    # It swaps the 'user' and 'assistant' labels and adds a new prompt.
    # This is what makes the roleplay so easy.
    if i % 2 == 0:
        chatbot.messages = swap_roles(chatbot.messages, prompt_2)
    else:
        chatbot.messages = swap_roles(chatbot.messages, prompt_1)

    # We swapped out the last 'assistant' response to be a 'user' response.
    # As a result, we use resend() to ask the Chatbot to respond to the last message again, which is the last chatbot's message/roleplay.
    chatbot.resend()

    time.sleep(SLEEP_TIME)

Finally, once the entire session is complete, we use PhaseLLM's helper functions to export it all to easily readable HTML.

# Save the outputs to HTML.

h = chatbotToHtml(chatbot)

toHtmlFile(h, 'output.html')
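
To make the role swap in the loop concrete, here is a minimal offline sketch. It assumes, based on the description in this tutorial, that swap_roles() sets a new system prompt and flips the 'user' and 'assistant' labels. The swap_roles_sketch() function, the shortened prompts, and the placeholder replies are our own stand-ins for illustration, not PhaseLLM code, and no API calls are made.

```python
from typing import Dict, List

Message = Dict[str, str]


def swap_roles_sketch(messages: List[Message], new_prompt: str) -> List[Message]:
    """Illustrative stand-in for swap_roles(); an assumption, not PhaseLLM's code."""
    swapped = [{"role": "system", "content": new_prompt}]
    for message in messages:
        if message["role"] == "user":
            swapped.append({"role": "assistant", "content": message["content"]})
        elif message["role"] == "assistant":
            swapped.append({"role": "user", "content": message["content"]})
        # Any other role (such as the previous system prompt) is dropped.
    return swapped


# Shortened, hypothetical stand-ins for prompt_1 and prompt_2.
GIL_PROMPT = "You are Gil, a lost salesperson."
SAURON_PROMPT = "You are Sauron, annoyed at the door."

messages = [
    {"role": "system", "content": GIL_PROMPT},
    {"role": "assistant", "content": "Hellooooo? Hello? *knock knock*"},
]

speakers = []
for i in range(3):
    # Same alternation as the tutorial loop: even turns go to Sauron, odd to Gil.
    next_prompt = SAURON_PROMPT if i % 2 == 0 else GIL_PROMPT
    messages = swap_roles_sketch(messages, next_prompt)

    # After the swap, the other character's last line is the final 'user' message,
    # which is what the current character responds to.
    assert messages[-1]["role"] == "user"

    speaker = "Sauron" if next_prompt == SAURON_PROMPT else "Gil"
    speakers.append(speaker)

    # Placeholder reply standing in for the LLM response.
    messages.append({"role": "assistant", "content": f"<{speaker} reply {i + 1}>"})

print(speakers)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Because each character always sees its own lines as 'assistant' messages and the other character's lines as 'user' messages, one chat history can serve both personas.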

Have Questions?

As always, reach out to me at w (at) phaseai (dot) com if you want to collaborate. You can also follow PhaseLLM on Twitter.